TensorFlow

This is a

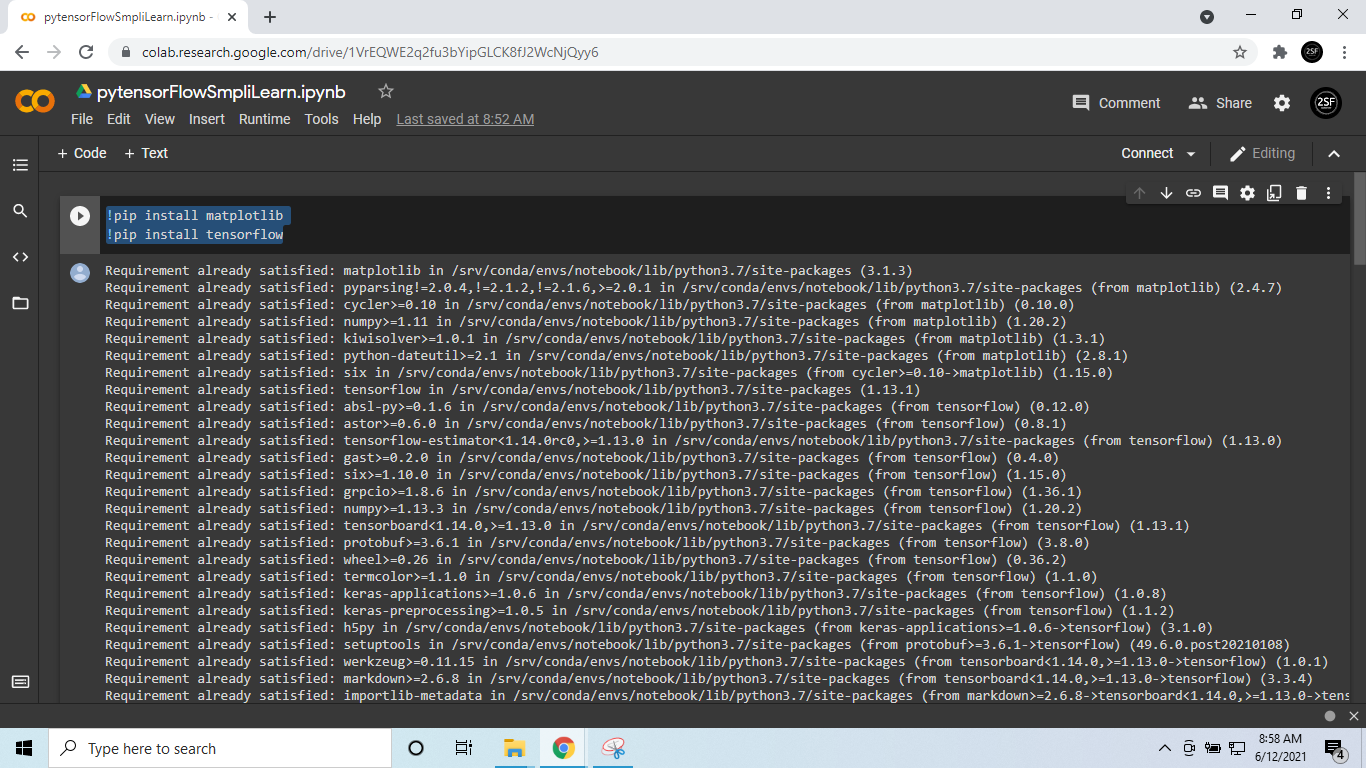

ensorFlow(TensorFlow ) example. you can simply run using 'Google colab' (https://colab.research.google.com/ ) or 'Jupyter note book' ( https://jupyter.org/try).

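💻Step 1 : Install the required packages.

Note: the code in this post uses the TensorFlow 1.x API (`tf.placeholder`, `tf.Session` and the `tensorflow.examples.tutorials.mnist` helper), which is not available under these names in TensorFlow 2.x. A plain `pip install tensorflow` now installs 2.x, so install a 1.x release instead (for example `tensorflow<2`, which needs Python 3.7 or older).

```
!pip install matplotlib
!pip install "tensorflow<2"
```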

💻Step 2 :

Import the packages: TensorFlow, NumPy and Matplotlib, plus the MNIST loader that ships with TensorFlow 1.x.

Here is the import code.👇👇

```python
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
from tensorflow.examples.tutorials.mnist import input_data  # TensorFlow 1.x only
```

💻Step 3 :

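Load MNIST with one-hot labels (it is downloaded into the `MNIST/` folder if it is not already there), and create a 10×10 grid of subplots for the next step.👇👇

```python
mnist = input_data.read_data_sets("MNIST/", one_hot=True)
fig, ax = plt.subplots(10, 10)
```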
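`read_data_sets(..., one_hot=True)` returns each image as a flat row of 784 floats scaled to [0, 1] and each label as a length-10 one-hot vector. TensorFlow 2.x does not include that helper; `tf.keras.datasets.mnist.load_data()` instead returns uint8 images of shape (N, 28, 28) and integer labels. As a minimal sketch of the conversion between the two layouts, here is a hypothetical `to_flat_one_hot` helper in NumPy, run on random stand-in arrays (not real MNIST):

```python
import numpy as np

def to_flat_one_hot(images, labels, num_classes=10):
    """Hypothetical helper: turn (N, 28, 28) uint8 images and integer labels
    into (N, 784) float32 pixels in [0, 1] and (N, num_classes) one-hot rows."""
    flat = images.reshape(len(images), -1).astype(np.float32) / 255.0
    one_hot = np.zeros((len(labels), num_classes), dtype=np.float32)
    one_hot[np.arange(len(labels)), labels] = 1.0  # exactly one 1 per row
    return flat, one_hot

# Random stand-in data with the same shapes and dtypes as keras' MNIST arrays.
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)
fake_labels = np.array([7, 2, 1, 0, 4])

demo_x, demo_y = to_flat_one_hot(fake_images, fake_labels)
print(demo_x.shape, demo_y.shape)  # (5, 784) (5, 10)
print(demo_y[0])                   # one-hot row for label 7
```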

💻Step 4 :

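Show the first 100 training images, one per subplot. Each image is stored as a flat row of 784 pixels, so it is reshaped to 28×28 before plotting.👇👇

```python
k = 0  # index of the next image to draw
for i in range(10):
    for j in range(10):
        ax[i][j].imshow(mnist.train.images[k].reshape(28, 28), aspect='auto')
        k += 1
plt.show()
```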
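The nested loop fills the grid row by row, so the counter `k` always equals `i * 10 + j`, and `reshape(28, 28)` undoes the flattening of each image. As an illustrative alternative (not the post's method), the same 100 digits can be tiled into one 280×280 array with NumPy and drawn with a single `imshow` call. `tile_grid` is a hypothetical helper and the input below is random stand-in data:

```python
import numpy as np

def tile_grid(flat_images, rows=10, cols=10, side=28):
    """Hypothetical helper: arrange rows*cols flattened images into one
    (rows*side, cols*side) array; image k lands in cell (k // cols, k % cols)."""
    imgs = flat_images[: rows * cols].reshape(rows, cols, side, side)
    # Reorder to (grid row, pixel row, grid col, pixel col), then merge axes.
    return imgs.transpose(0, 2, 1, 3).reshape(rows * side, cols * side)

rng = np.random.default_rng(0)
flat = rng.random((100, 784), dtype=np.float32)  # stand-in for mnist.train.images[:100]
mosaic = tile_grid(flat)
print(mosaic.shape)  # (280, 280)

# To display it: plt.imshow(mosaic, cmap="gray"); plt.show()
```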

💻Step 5 :(optional)

Print the shape of the training images (number of images × 784 pixels).👇👇

```python
print("shape of feature matrix:", mnist.train.images.shape)
```

💻Step 6 :(optional)

Print the shape of the training labels (number of images × 10 classes).👇👇

```python
print("shape of label matrix:", mnist.train.labels.shape)
```

💻Step 7 :(optional)

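Print the one-hot label of the first training image: ten values with a 1 at the index of the digit.👇👇

```python
print("one-hot encoding for 1st observation:\n", mnist.train.labels[0])
```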

💻Step 8 :

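Define the model (softmax regression), the cross-entropy loss and the training step, then start a session and initialize the variables.👇👇

```python
x = tf.placeholder(tf.float32, [None, 784])  # input images, 784 pixels each
w = tf.Variable(tf.zeros([784, 10]))         # weights
b = tf.Variable(tf.zeros([10]))              # bias
y = tf.nn.softmax(tf.matmul(x, w) + b)       # predicted class probabilities

y_ = tf.placeholder(tf.float32, [None, 10])  # true one-hot labels
# Cross-entropy summed over the batch. tf.log(y) can produce NaN if a
# probability reaches 0; tf.nn.softmax_cross_entropy_with_logits is the
# numerically stable alternative.
cross_entropy = -tf.reduce_sum(y_ * tf.log(y))
train_step = tf.train.GradientDescentOptimizer(0.01).minimize(cross_entropy)

init = tf.global_variables_initializer()  # op that initializes w and b
sess = tf.Session()
sess.run(init)
```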
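Step 8 builds softmax regression: y = softmax(xW + b), trained by minimizing the summed cross-entropy -Σ y_ log(y). For that loss, the gradient with respect to the logits xW + b is y - y_, so the weight gradient is xᵀ(y - y_) and the bias gradient is the column sum of y - y_. `GradientDescentOptimizer(0.01).minimize` derives and applies this update automatically; the NumPy sketch below writes one step out by hand on a small random batch (stand-in data, not MNIST). The helper names are hypothetical, and the demo uses a step of 0.001 rather than the post's 0.01 because dense random inputs produce much larger updates than real MNIST images.

```python
import numpy as np

def softmax(z):
    # Subtracting the row max avoids overflow and leaves the result unchanged.
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(y, y_true):
    # Summed over the batch, like -tf.reduce_sum(y_ * tf.log(y)).
    return -np.sum(y_true * np.log(y))

def sgd_step(x, y_true, w, b, lr=0.01):
    """One gradient-descent update for softmax regression."""
    y = softmax(x @ w + b)
    grad_logits = y - y_true              # d(loss)/d(logits)
    w = w - lr * (x.T @ grad_logits)      # dW = x^T (y - y_)
    b = b - lr * grad_logits.sum(axis=0)  # db = column sum of (y - y_)
    return w, b

rng = np.random.default_rng(0)
x_np = rng.random((8, 784))                     # stand-in batch of 8 "images"
y_np = np.eye(10)[rng.integers(0, 10, 8)]       # random one-hot labels
w_np, b_np = np.zeros((784, 10)), np.zeros(10)  # same zero init as Step 8

loss_before = cross_entropy(softmax(x_np @ w_np + b_np), y_np)
w_np, b_np = sgd_step(x_np, y_np, w_np, b_np, lr=0.001)
loss_after = cross_entropy(softmax(x_np @ w_np + b_np), y_np)
print(loss_before, loss_after)
```

With zero weights every class gets probability 0.1, so the starting loss is 8 · log(10) for a batch of 8.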

💻Step 9 :

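Train for 1000 steps, each on the next batch of 100 training images.👇👇

```python
for i in range(1000):
    batch_xs, batch_ys = mnist.train.next_batch(100)  # next 100 images and labels
    sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})
```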
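`mnist.train.next_batch(100)` comes from the TensorFlow 1.x tutorial helper; in total the loop draws 1000 × 100 = 100,000 examples, so it passes over the training set more than once. A minimal NumPy sketch of the same idea, assuming a hypothetical `batches` generator that reshuffles at the start of every pass and, unlike the original helper, simply skips a ragged tail smaller than the batch size:

```python
import numpy as np

def batches(images, labels, batch_size, seed=0):
    """Hypothetical stand-in for mnist.train.next_batch: yields
    (images, labels) batches forever, reshuffling once per epoch."""
    rng = np.random.default_rng(seed)
    n = len(images)
    while True:
        order = rng.permutation(n)  # new shuffle every epoch
        for start in range(0, n - batch_size + 1, batch_size):
            idx = order[start:start + batch_size]
            yield images[idx], labels[idx]

# Stand-in data: 250 fake flattened images with one-hot labels.
rng = np.random.default_rng(1)
train_images = rng.random((250, 784), dtype=np.float32)
train_labels = np.eye(10, dtype=np.float32)[rng.integers(0, 10, 250)]

stream = batches(train_images, train_labels, batch_size=100)
for step in range(5):  # the post runs 1000 steps
    batch_xs, batch_ys = next(stream)
    # a training update on (batch_xs, batch_ys) would go here
print(batch_xs.shape, batch_ys.shape)  # (100, 784) (100, 10)
```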

💻Step 10 :

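Evaluate accuracy on the test set: the fraction of test images whose predicted digit (the largest softmax output) matches the label.👇👇

```python
# True where the predicted digit equals the true digit
correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
# Fraction of correct predictions
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
print(sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels}))
```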
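Step 10 counts a prediction as correct when the index of the largest softmax output matches the index of the 1 in the one-hot label, then averages those booleans. The same arithmetic in NumPy, as a sketch with a hypothetical `np_accuracy` helper on small made-up arrays (three classes instead of ten to keep it short, not real model output):

```python
import numpy as np

def np_accuracy(probs, one_hot_labels):
    # argmax over classes turns each row into a class index,
    # like tf.argmax(y, 1) and tf.argmax(y_, 1).
    correct = np.argmax(probs, axis=1) == np.argmax(one_hot_labels, axis=1)
    return correct.astype(np.float32).mean()  # like tf.reduce_mean(tf.cast(...))

probs = np.array([[0.1, 0.7, 0.2],   # predicts class 1
                  [0.6, 0.3, 0.1],   # predicts class 0
                  [0.2, 0.2, 0.6],   # predicts class 2
                  [0.5, 0.4, 0.1]])  # predicts class 0
labels = np.eye(3)[[1, 0, 1, 2]]     # true classes 1, 0, 1, 2
print(np_accuracy(probs, labels))    # 0.5
```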
